If you Google the word Phoneme, you will get around 1,13,00,000 results in mere 0.51 seconds. Wikipedia defines Phoneme as one of the units of sound that distinguishes one word from another in a particular language. But what does it mean to Sharath Adavanne, Research Scientist at Zapr?

During our annual tech conference, Sharath explained how Phoneme can be effectively used to train machines for synthesizing audio in multiple languages without compromising on the natural accent of actors. And to bring light into his efforts, we bring you the second blog of the ‘UNBLT series’ which will highlight how phonemes render a viable road to dub media content into multiple languages.

What is Audio?

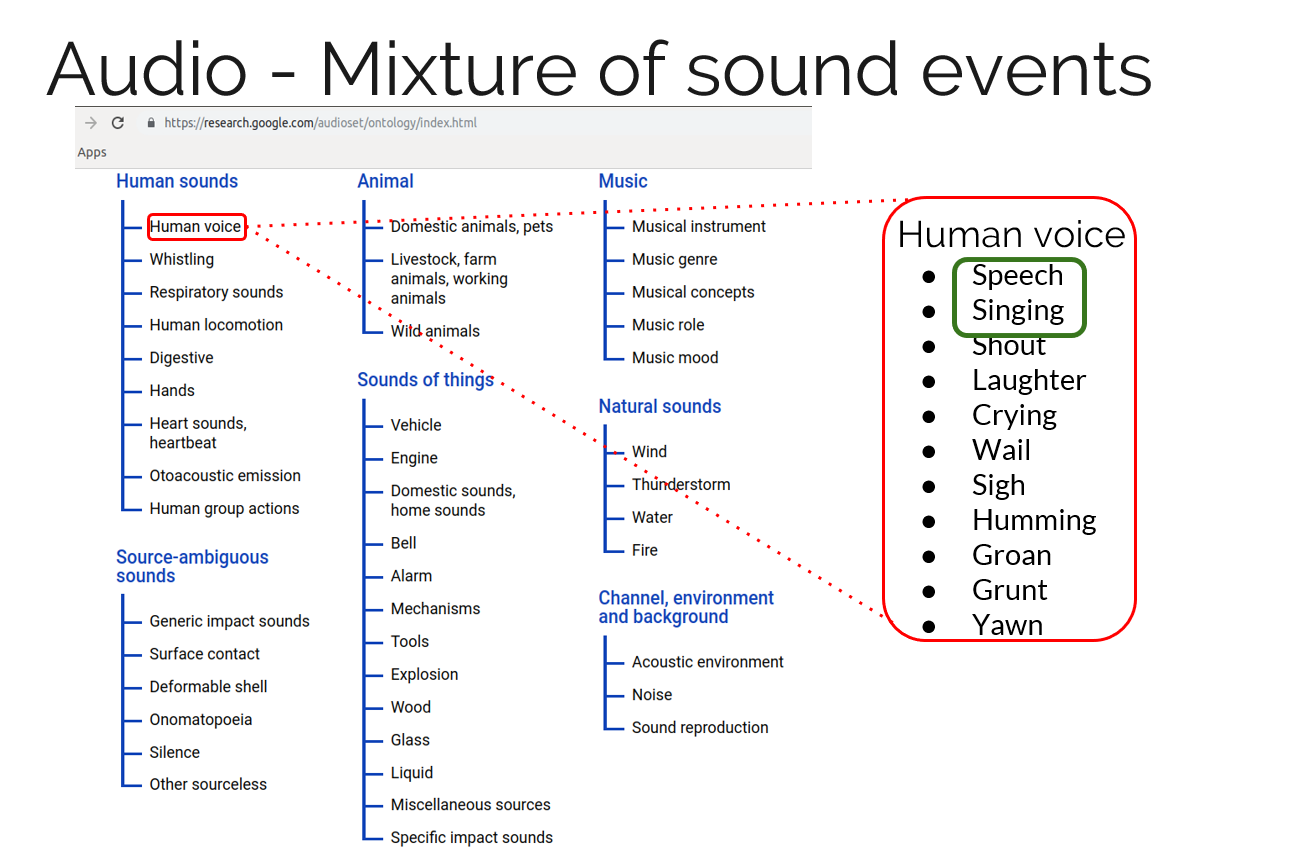

An audio recording is basically composed of a mixture of different sound events. In our day-to-day life, we come across a wide variety of sound events such as car honk, people speaking, and music. Recently, researchers from Google made an effort to categorize a wide variety of these sound events into distinct categories and hierarchies.

Among them, speech and music were deemed as the most studied sound events. However, these sound events - music and speech, form a super small subset of all the possible sound events in the world. The primary reason for this is that both speech and music are the most commonly used form of expressions for humans and both of them are highly-structured data. To shed more light on it, let me give you an example. In speech, we know the word ‘WHY’ is mostly used for asking questions. And when it comes to music, there are twelve basic notes and all of the music is a combination of it.

At Zapr, we are not only exploring speech sound-event related technology such as automatic speech recognition (ASR) and text to speech (TTS), but we go beyond speech and music to work on algorithms that can recognize the different sound events in the environment where the audio is being recorded and this technology is commonly known as machine listening.

The Pivotal role of Machine Listening

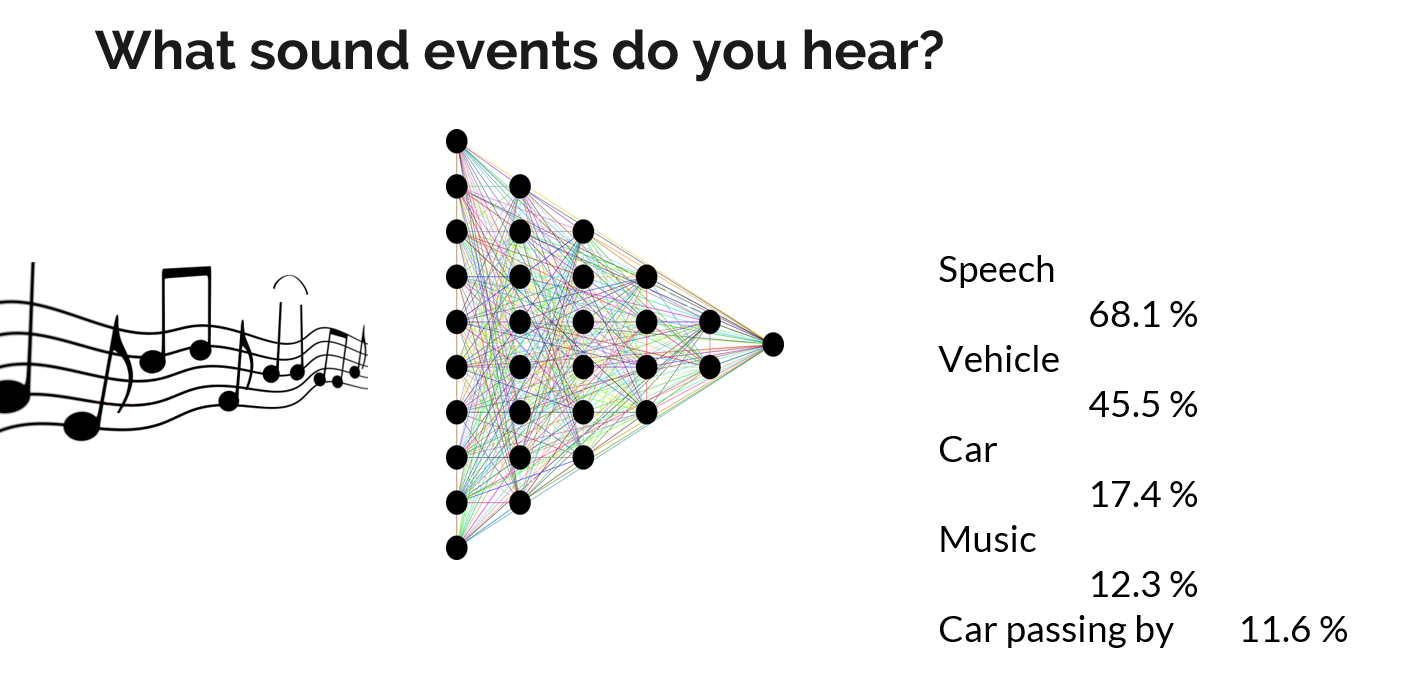

The machine listening technology enables us to transcribe sound events such as speech, music, traffic, shouting, and claps in the multimedia content. You would have seen this kind of information in the subtitles of recent movies and series on YouTube and Netflix. Additionally, this technology also enables us to comprehend the sound environment of audio recordings in an optimal manner. For example, imagine a scenario where you are driving your car with your friends and listening to some Pink Floyd music. Given just an audio excerpt from this scene, the aim of the AI-powered machine listening algorithm is to guess the scenario. The output of our AI-powered machine listening algorithm for the above scenario is shown below.

It not only guessed that there was music, speech and car sounds, but it also guessed that there were other cars passing by which is a common scene in any traffic scene. Further, ZAPR’s state-of-the-art automatic content recognition (ACR) technology can easily detect the music being played in the car is of Pink Floyd. All this information about the user including Pink Floyd music, sounds made by the car, spoken language, and their gender can potentially be employed for profiling the user for advertising.

That is awesome, but why is Zapr doing ASR and TTS?

Before we answer that, let us first define what ASR and TTS is

- ASR: Given an audio recording with speech in it, the ASR technology enables us to recognize the text which is being spoken in the recording.

- TTS: Given the text, speaker gender and emotion, the TTS technology enables us to synthesize speech with the said gender emotion and text.

Now let us talk about why ZAPR is doing this….

We firmly believe that language should not undermine the reach of media consumption. Our goal is to ensure that a person who does not understand Hindi should be able to enjoy Bollywood content in his/her own language. This can be powered with ASR and TTS technologies in different ways. Firstly, ASR can be leveraged to obtain Hindi subtitles of a Bollywood content for both native and amateur Hindi speakers. Next, Hindi subtitles can be translated into regional languages to entice viewers who do not understand Hindi. And lastly, tapping into the potential benefits of TTS technology, we use the regional language subtitles to synthesize speech that can create a seamless media consumption experience. In a nutshell, these three processes create an 'automatic dubbing system' that can potentially translate media content between any languages.

A Unique Approach to train Machines

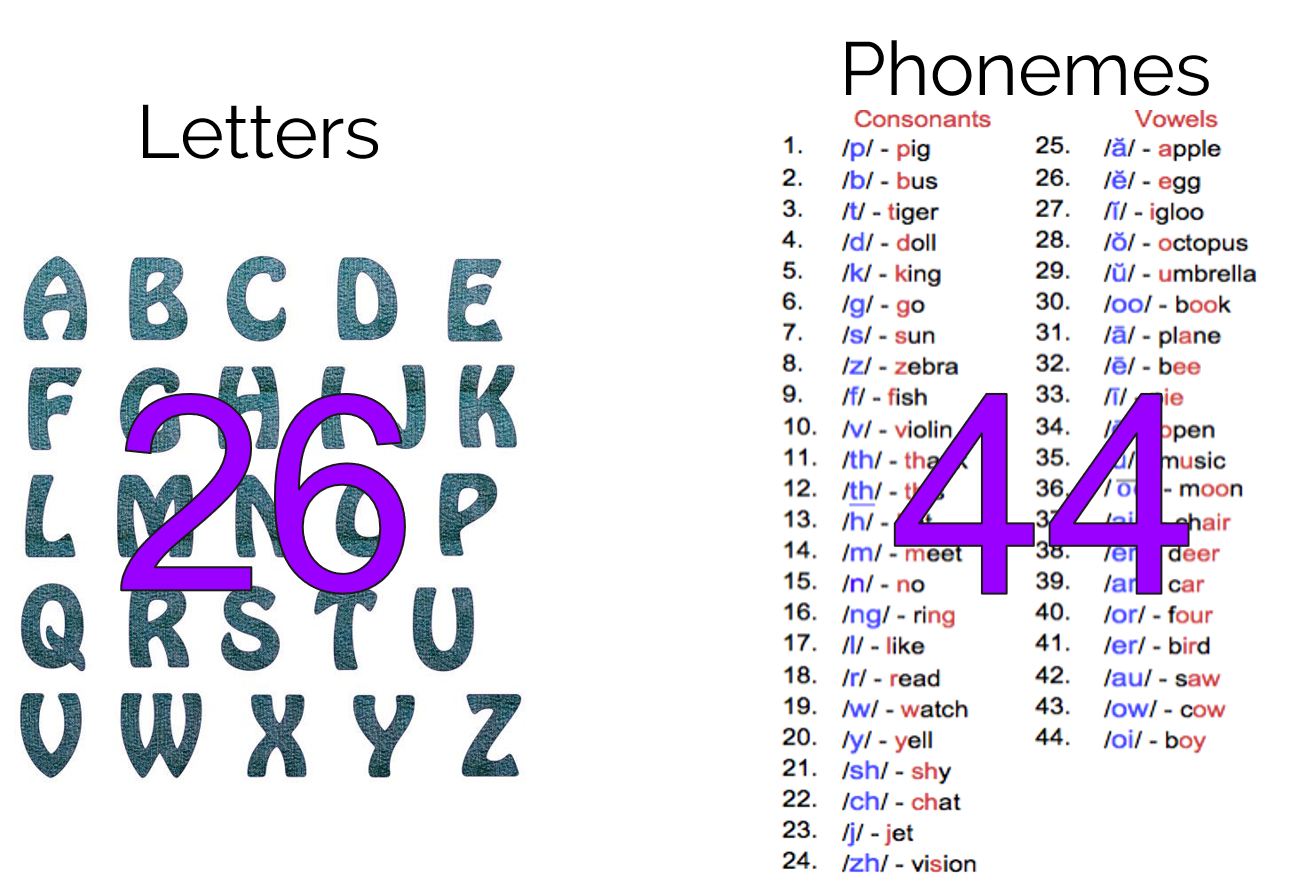

As pointed by Sharath, languages such as English are terrible at being expressive. For example, the letter C is pronounced differently in the word CAT and CHAT and this information is learned only with experience. But what if you come across a new word with C that you have never heard of, how would you pronounce it? Sounds complicated, right? That is where phonemes come into the picture. Although American English has 26 letters it has 44 unique phonemes. Since phonemes are unique sounds, given the phoneme representation of a word you can easily pronounce the word with no confusion.

For example, the same words CAT and CHAT are written as K-AE-T and CH-AE-T in phonemes where the pronunciation of the letter C is explicitly different in both the cases. Similarly, while building our ASR and TTS technologies we learn the mapping between each phoneme in the text to a corresponding sound in the audio. So, with our proprietary tech, we are working to enhance the understanding capability of machines and subsequently produce natural and more robust speech recognition and synthesis.

Zapr is hiring!

If you're a researcher or student who is interested in the fields of Digital Signal Processing, Audio Content Recognition, Automatic Speech Recognition, Speech Synthesis, Music Information Retrieval and Computer Vision, do send your resume to ps@zapr.in.