The technology world is seeing a paradigm shift in the way users interact with and consume data, or “experiences” as they are popularly called these days. We at Zapr strive to provide next-generation experiences to our clients and users.

To achieve this objective, we built an Augmented Reality (AR) experience for our users on the mobile web. The goals of this exercise were to:

- Achieve an AR experience similar to that of native apps, without the overhead of installing a mobile app.

- Provide a seamless user experience.

- Keep the user engaged with the content.

- Render the experience instantly, without any delay.

This blog post gives a first-hand account of how we selected the framework for our AR application, how it compares with the other major frameworks available, and the scope for future work. So let’s get started.

Web AR Frameworks

A-Frame + AR.js

Unlike native mobile development, AR on the web doesn’t have many frameworks to choose from. One major framework that provides basic AR features for the mobile web is A-Frame. A-Frame is an open-source web framework for building Virtual Reality (VR) experiences, primarily maintained by Mozilla and the WebVR community. Coupled with AR.js, it provides an AR experience on the mobile web. Of the options we evaluated, this combination was the most practical way to implement AR on the mobile web at the time of writing.

A-Frame + AR.js delivers the AR experience through predetermined markers: when the mobile camera detects a marker, any 3D object we have configured is rendered in its place. A sample marker file is shown below:

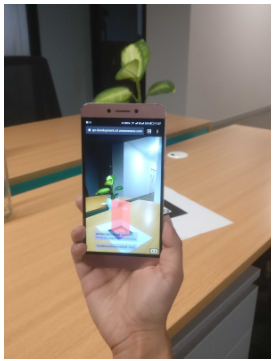

So when we open our web app and point the camera at the marker above, the configured 3D object appears in place of the marker.

Limitations

In our case, we cannot expect all our users to have a marker at hand. Moreover, the experience would be limited to the one predefined marker.

Approach

Hence, we came up with an alternative approach. We use the user’s camera to show their live surroundings, with 3D objects placed at random positions in the scene. We then add click listeners to these objects, so that whenever a user interacts with one we can perform an action such as showing a message or popup, redirecting to a landing page, or providing rewards or coupons. A-Frame loads the 3D objects in .gltf format. A number of open-source glTF models are available online, along with open-source converters and optimizers. Let’s get into the details:
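As a rough illustration of this approach, the placement and click handling could be sketched in JavaScript as follows. This is a minimal sketch, not our production code: the helper names (randomPosition, makeClickable), the placement ranges and the alert message are assumptions made for the example. It relies on A-Frame entities being DOM elements, and it assumes the scene has a cursor (for example cursor="rayOrigin: mouse") so that entities actually receive click events.

```javascript
// Pick a random "x y z" position in front of the viewer.
// The ranges are illustrative assumptions, chosen for a camera that sits
// at z = 5 and looks toward negative z.
function randomPosition(random = Math.random) {
  const between = (min, max) => min + random() * (max - min);
  const x = between(-4, 4);  // left/right of the viewer
  const y = between(0.5, 2); // around eye level
  const z = between(-3, 1);  // ahead of the camera
  return [x, y, z].map((v) => v.toFixed(2)).join(" ");
}

// Place an entity at a random position and attach a click listener.
// `entity` only needs setAttribute and addEventListener, which A-Frame
// entities provide because they are DOM elements.
function makeClickable(entity, onClick, random = Math.random) {
  entity.setAttribute("position", randomPosition(random));
  entity.addEventListener("click", () => onClick(entity));
  return entity;
}

// Browser-only wiring (skipped outside a page): make every glTF entity
// clickable. The alert stands in for a popup, redirect or coupon.
if (typeof document !== "undefined") {
  document.querySelectorAll("[gltf-model]").forEach((el) => {
    makeClickable(el, (clicked) => window.alert("You found " + clicked.id));
  });
}
```

Passing the random source as a parameter keeps the placement logic easy to test and makes it simple to swap in a seeded generator if repeatable layouts are needed.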

Code Snippet

Note: Make sure aframe.js and aframe-ar.js files are included in your source.

To make the camera available to our web application, we add this to our HTML file:

<a-scene embedded artoolkit="sourceType: webcam;">

Instantiate the camera with the appropriate position:

<a-camera position="0 1.6 5" user-height="1">

</a-camera>

To include the 3D object, declare it as an asset (A-Frame expects a-asset-item elements to sit inside an a-assets block):

<a-asset-item id="cocaCola" src="https://zapr-ad-creatives.s3.amazonaws.com/gltf/coke/scene.gltf"></a-asset-item>

Refer to the above 3D object from an entity in the body of the scene:

<a-entity position="3 1 0" id="cocaColaRunner" look-controls="reverseMouseDrag:true" gltf-model="#cocaCola" scale="0.2 0.2 0.2"></a-entity>
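Put together, the fragments above might be assembled into a single scene along these lines. The sketch builds the markup as a string in JavaScript so that it can be injected into a page. buildSceneMarkup and its parameters are hypothetical names introduced for the example, and the cursor attribute is an assumption added so that entities can receive click events; it is not part of the snippets above. It also assumes aframe.js and aframe-ar.js have already been loaded.

```javascript
// Assemble the scene markup from the fragments shown above.
// buildSceneMarkup is a hypothetical helper, not an A-Frame API.
function buildSceneMarkup({ assetId, modelUrl, position = "3 1 0", scale = "0.2 0.2 0.2" }) {
  return [
    // cursor="rayOrigin: mouse" is an assumption, added to enable clicks.
    '<a-scene embedded artoolkit="sourceType: webcam;" cursor="rayOrigin: mouse">',
    "  <a-assets>",
    `    <a-asset-item id="${assetId}" src="${modelUrl}"></a-asset-item>`,
    "  </a-assets>",
    '  <a-camera position="0 1.6 5" user-height="1"></a-camera>',
    `  <a-entity id="${assetId}Runner" position="${position}" scale="${scale}"`,
    `            gltf-model="#${assetId}" look-controls="reverseMouseDrag:true"></a-entity>`,
    "</a-scene>",
  ].join("\n");
}

const markup = buildSceneMarkup({
  assetId: "cocaCola",
  modelUrl: "https://zapr-ad-creatives.s3.amazonaws.com/gltf/coke/scene.gltf",
});

// Browser-only: inject the scene into the page (skipped outside a page).
if (typeof document !== "undefined") {
  document.body.insertAdjacentHTML("beforeend", markup);
}
```

Writing the same markup directly in the HTML file works just as well; the string form is only convenient when the model URL varies from one campaign to the next.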

Other Frameworks

Three.js

Three.js is a library for creating and displaying 3D objects in the browser. It is a powerful framework with features such as scenes, cameras, animation and effects. It has a steeper learning curve than A-Frame, owing to its larger and more complex feature set.

Tracking.js

Tracking.js focuses on bringing computer vision techniques to the browser. Some of the features it offers are:

- Color tracking (Static images, Live camera, Videos)

- Face detection (Static images, Live camera)

Tracking.js reports a bounding region in the scene for each match (based on the above criteria), which can then be used to add further interactivity for users.
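To make the idea of reusing the tracked region concrete, here is a small sketch that checks whether a tap falls inside a rectangle reported by a Tracking.js color tracker. The hitTest helper and the #video element id are assumptions made for the example. The sketch also assumes the video is displayed at its native resolution, so that tap coordinates and tracked coordinates line up.

```javascript
// Return the first tracked rectangle containing the point (x, y), or null.
// Each rectangle is expected to have x, y, width and height properties.
function hitTest(rects, x, y) {
  const hit = rects.find(
    (r) => x >= r.x && x <= r.x + r.width && y >= r.y && y <= r.y + r.height
  );
  return hit || null;
}

// Browser-only wiring, skipped when tracking.js is not loaded.
if (typeof tracking !== "undefined" && typeof document !== "undefined") {
  let latestRects = [];

  // Track the colors that tracking.js registers by default.
  const tracker = new tracking.ColorTracker(["magenta", "cyan", "yellow"]);
  tracker.on("track", (event) => {
    latestRects = event.data; // rectangles found in the current frame
  });
  tracking.track("#video", tracker, { camera: true });

  // React when the user taps inside a tracked region.
  document.querySelector("#video").addEventListener("click", (e) => {
    const hit = hitTest(latestRects, e.offsetX, e.offsetY);
    if (hit) console.log("Tapped a tracked region of color", hit.color);
  });
}
```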

Annyang

Annyang adds voice-enabled interaction to a web application. It’s a very lightweight library and supports multiple languages. We can program it to pick up certain keywords from a voice command and perform the corresponding operation.

For example, we could have something like below:

'show me *tag': showFlickr,

Using Annyang, whenever the user says something like “Show me cats”, the showFlickr function is invoked with “cats” as its argument, and the corresponding operation is performed.
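A fuller version of that command might look like the sketch below. The body of showFlickr is a hypothetical stand-in: it simply builds a Flickr search URL for the spoken tag. What the handler actually does is up to the application. The annyang calls are guarded so that the snippet does nothing when the library is not loaded or speech recognition is unavailable.

```javascript
// Hypothetical handler: build a Flickr search URL for the spoken tag.
// A real application might fetch and display the photos instead.
function showFlickr(tag) {
  return "https://www.flickr.com/search/?text=" + encodeURIComponent(tag);
}

// "*tag" is a splat: it captures the rest of the spoken phrase
// and passes it to the handler as an argument.
const commands = {
  "show me *tag": showFlickr,
};

// Browser-only wiring: register the commands and start listening.
if (typeof annyang !== "undefined" && annyang) {
  annyang.addCommands(commands);
  annyang.start();
}
```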

JSARToolkit

JSARToolkit is similar to A-Frame + AR.js in that it supports marker detection and overlaying a 2D canvas or WebGL content on top of a marker. JSARToolkit uses the Three.js framework as a dependency.

Conclusion and Future Work

Though still in its infancy, AR on the mobile web lets developers harness the basic capabilities of an AR experience without the overhead of native apps. This enables them to quickly build a prototype, validate their ideas and analyse how users interact with it. In our experience, A-Frame + AR.js helped us quickly develop an AR ad with a higher interaction rate.

As more AR capabilities open up on the mobile web, we believe the gap between mobile web applications and native apps will narrow. AR coupled with VR gives us what is known as “Mixed Reality”, which aims to fuse the digital and real worlds into an entirely new experience. With mobile processors getting faster by the day, the time may not be far off when we interact with a variety of AR experiences just as we interact with images and videos today.